This product includes GeoLite2 data created by MaxMind, available from <a href="http://www.maxmind.com">http://www.maxmind.com</a>.

That's easy enough. The next question is how to get this into my local Postgres database. A bit over a year ago, I very happily gave up on my Dell computer and opted for a Mac. One downside to a Mac (and this is obviously my personal opinion) is that SQL Server doesn't run on it. Happily, Postgres does, and it is extremely easy to install from postgresapp.com. An interface similar enough to SQL Server Management Studio, called pgAdmin III (pgadmin3), is almost as easy to install from the pgAdmin website.

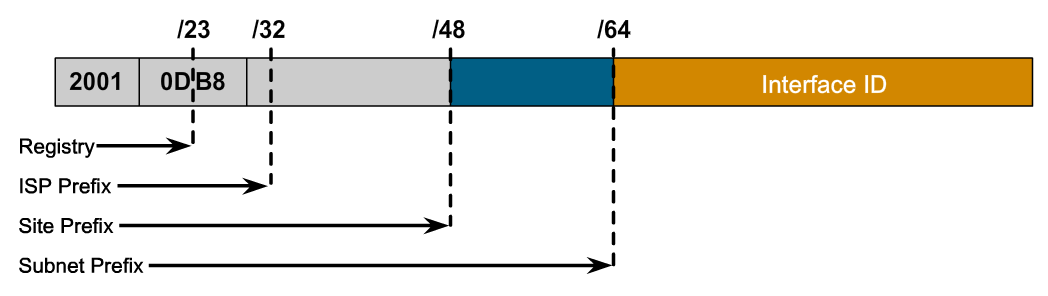

So, the next problem is getting the MaxMind data into Postgres. Loading the two tables themselves is easy, using the copy command. The challenge is IPv6 versus IPv4 addresses. Most people who work with IP addresses know the IPv4 kind best. These are 32 bits and look something like this: 173.194.121.17 (this happens to be an address for www.google.com, obtained by running ping www.google.com in a terminal window). Alas, the data from MaxMind uses IPv6 values rather than IPv4, with a mask length to represent ranges.
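To make "32 bits" concrete, here is a small Python sketch (Python and its standard `ipaddress` module are my choice for illustration, and `ipv4_to_int` is a hypothetical helper name). It packs the four octets into one integer with the same shift-and-add arithmetic the SQL later in this post uses, and shows that addresses from 128.0.0.0 up no longer fit in a signed 32-bit integer.

```python
import ipaddress

def ipv4_to_int(dotted: str) -> int:
    """Pack a dotted-quad IPv4 string into one unsigned 32-bit value."""
    a, b, c, d = (int(part) for part in dotted.split("."))
    # Each octet is 8 bits, so shift them into place and add.
    return (a << 24) + (b << 16) + (c << 8) + d

addr = "173.194.121.17"
print(ipv4_to_int(addr))                  # 2915203345
print(int(ipaddress.IPv4Address(addr)))   # same value from the standard library
print(ipv4_to_int(addr) > 2**31 - 1)      # True: too large for a signed 32-bit int
```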

In IPv6, the above would look like: ::ffff:173.194.121.17 (to be honest, this is a hybrid format, called an IPv4-mapped IPv6 address, for representing IPv4 addresses in the IPv6 address space). And the situation is a bit worse, because these records contain address ranges. So the record that covers this address is really the range ::ffff:173.194.0.0/112.
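Python's standard `ipaddress` module understands this hybrid notation, so a short sketch can confirm that the IPv4 address is embedded in the last 32 bits and that the /112 block covers 65,536 addresses. The values are the ones from the paragraph above; nothing here is MaxMind-specific.

```python
import ipaddress

mapped = ipaddress.IPv6Address("::ffff:173.194.121.17")
print(mapped.ipv4_mapped)   # 173.194.121.17 (the embedded IPv4 address)

# Every IPv4-mapped address lives inside the ::ffff:0:0/96 block.
print(mapped in ipaddress.IPv6Network("::ffff:0:0/96"))   # True

# A /112 leaves 128 - 112 = 16 bits for addresses, i.e. 2**16 of them.
block = ipaddress.IPv6Network("::ffff:173.194.0.0/112")
print(block.num_addresses)  # 65536
```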

The "112" is called a subnet mask (more precisely, a mask or prefix length; MaxMind's column is network_mask_length), and IPv4 uses them too. In IPv4, the mask length is the number of leading bits that identify the network, so it ranges from 0 to 32, with a number like "24" being very common. "112" doesn't make sense in a 32-bit addressing scheme. To fast forward, the "112" mask for IPv6 corresponds to 16 in the IPv4 world. This means that the first 16 bits identify the network and the last 16 bits identify hosts within it. That is, the addresses range from 173.194.0.0 to 173.194.255.255. The relationship between the two mask lengths is easy to express: the IPv4 mask length is the IPv6 mask length minus 96, because the ::ffff: prefix accounts for the first 96 of the 128 IPv6 bits (80 zero bits followed by 16 one bits).
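The "minus 96" rule can be sketched in a few lines of Python. The helper name `mapped_block_to_ipv4` is hypothetical, and the sketch assumes each start address is aligned to its mask (`IPv4Network` raises `ValueError` otherwise). It strips the mapping, subtracts 96 from the mask length, and reads off the first and last addresses of the range.

```python
import ipaddress

MAPPED_PREFIX_BITS = 96  # 80 zero bits + 16 one bits ("::ffff:") before the IPv4 part

def mapped_block_to_ipv4(network_start_ip: str, network_mask_length: int) -> ipaddress.IPv4Network:
    """Convert an IPv4-mapped IPv6 block (start address + mask length) to an IPv4 network."""
    v4 = ipaddress.IPv6Address(network_start_ip).ipv4_mapped
    if v4 is None:
        raise ValueError(f"{network_start_ip} is not an IPv4-mapped address")
    # IPv4 mask length = IPv6 mask length - 96
    return ipaddress.IPv4Network(f"{v4}/{network_mask_length - MAPPED_PREFIX_BITS}")

net = mapped_block_to_ipv4("::ffff:173.194.0.0", 112)
print(net)                    # 173.194.0.0/16
print(net.network_address)    # 173.194.0.0 (first address)
print(net.broadcast_address)  # 173.194.255.255 (last address)
```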

I have to credit this blog for helping me understand this, even though it doesn't give the exact formula. Here, I am going to shamelessly reproduce a figure from that blog (along with its original attribution):

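The Postgres code for creating and loading the tables then goes as follows. It loads the raw CSV into a staging table, then converts the IPv4-mapped rows into an ipcity table that stores each range's start and end addresses both as strings and as integers: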

```sql
-- Staging table: one column per field in GeoLite2-City-Blocks.csv
create table ipcity_staging (
    network_start_ip varchar(255),
    network_mask_length int,
    geoname_id int,
    registered_country_geoname_id int,
    represented_country_geoname_id int,
    postal_code varchar(255),
    latitude decimal(15, 10),
    longitude decimal(15, 10),
    is_anonymous_proxy int,
    is_satellite_provider int
);

copy public.ipcity_staging
from '...data/MaxMind IP/GeoLite2-City-CSV_20140401/GeoLite2-City-Blocks.csv'
with CSV HEADER;
```

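```sql
-- Final table: one row per IPv4 range, with the endpoints stored both as
-- integers (for range lookups) and as dotted-quad strings (for reading)
create table ipcity (
    IPCityId serial not null,
    IPStart bigint not null,  -- bigint: addresses from 128.0.0.0 up exceed the int range
    IPEnd bigint not null,
    IPStartStr varchar(255) not null,
    IPEndStr varchar(255) not null,
    GeoNameId int,
    GeoNameId_RegisteredCountry int,
    GeoNameId_RepresentedCountry int,
    PostalCode varchar(255),
    Latitude decimal(15, 10),
    Longitude decimal(15, 10),
    IsAnonymousProxy int,
    IsSatelliteProvider int,
    unique (IPStart, IPEnd),
    unique (IPStartStr, IPEndStr)
);
```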

```sql
insert into ipcity(IPStart, IPEnd, IPStartStr, IPEndStr, GeoNameId, GeoNameId_RegisteredCountry,
                   GeoNameId_RepresentedCountry, PostalCode, Latitude, Longitude,
                   IsAnonymousProxy, IsSatelliteProvider)
select IPStart, IPEnd, IPStartStr, IPEndStr, geoname_id, registered_country_geoname_id,
       represented_country_geoname_id, postal_code, latitude, longitude,
       is_anonymous_proxy, is_satellite_provider
from (select -- Pack each dotted quad into one integer. Casting to bigint before
             -- shifting keeps addresses from 128.0.0.0 up from overflowing int.
             ((split_part(IPStartStr, '.', 1)::bigint << 24) +
              (split_part(IPStartStr, '.', 2)::bigint << 16) +
              (split_part(IPStartStr, '.', 3)::bigint << 8) +
              (split_part(IPStartStr, '.', 4)::bigint)
             ) as IPStart,
             ((split_part(IPEndStr, '.', 1)::bigint << 24) +
              (split_part(IPEndStr, '.', 2)::bigint << 16) +
              (split_part(IPEndStr, '.', 3)::bigint << 8) +
              (split_part(IPEndStr, '.', 4)::bigint)
             ) as IPEnd,
             st.*
      from (select st.*,
                   -- substr(..., 8) drops the 7-character '::ffff:' prefix, and
                   -- network_mask_length - 96 turns the IPv6 mask length into the IPv4 one
                   host(inet(substr(network_start_ip, 8) || '/' || network_mask_length - 96)
                       ) as IPStartStr,
                   -- last address in the range = first address OR'ed with the host mask
                   host(inet(host(inet(substr(network_start_ip, 8) || '/' || network_mask_length - 96))) |
                        hostmask(inet(substr(network_start_ip, 8) || '/' || network_mask_length - 96))
                       ) as IPEndStr
            from ipcity_staging st
            where network_start_ip like '::ffff:%'  -- keep only IPv4-mapped ranges
           ) st
     ) st;
```
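As a cross-check on the insert ... select, here is a Python sketch of the same per-row transformation. The function name `to_ipcity_row` and the two sample rows are made up for illustration; they are not MaxMind data (2001:db8:: is an IPv6 documentation prefix), and only a few of the staging columns are included. Like the SQL, it skips rows that are not IPv4-mapped, drops the 7-character `::ffff:` prefix, and subtracts 96 from the mask length. Unlike Postgres' inet type, `IPv4Network` raises `ValueError` if a start address has bits set past its mask.

```python
import csv
import io
import ipaddress

MAPPED_PREFIX_BITS = 96

def to_ipcity_row(row: dict):
    """Mirror the SQL transform for one staging row; return None for non-IPv4 rows."""
    start_ip = row["network_start_ip"]
    if not start_ip.startswith("::ffff:"):
        return None  # same filter as: network_start_ip like '::ffff:%'
    prefix = int(row["network_mask_length"]) - MAPPED_PREFIX_BITS
    net = ipaddress.IPv4Network(f"{start_ip[7:]}/{prefix}")  # [7:] drops '::ffff:'
    return {
        "IPStart": int(net.network_address),
        "IPEnd": int(net.broadcast_address),
        "IPStartStr": str(net.network_address),
        "IPEndStr": str(net.broadcast_address),
        "GeoNameId": int(row["geoname_id"]) if row["geoname_id"] else None,
        "PostalCode": row["postal_code"] or None,  # empty CSV field -> NULL
    }

# Hypothetical sample rows, not real MaxMind data.
sample = io.StringIO(
    "network_start_ip,network_mask_length,geoname_id,postal_code\n"
    "::ffff:173.194.0.0,112,123,\n"
    "2001:db8::,32,456,\n"
)
rows = [r for r in map(to_ipcity_row, csv.DictReader(sample)) if r is not None]
print(rows)
```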