Saturday, March 1, 2014

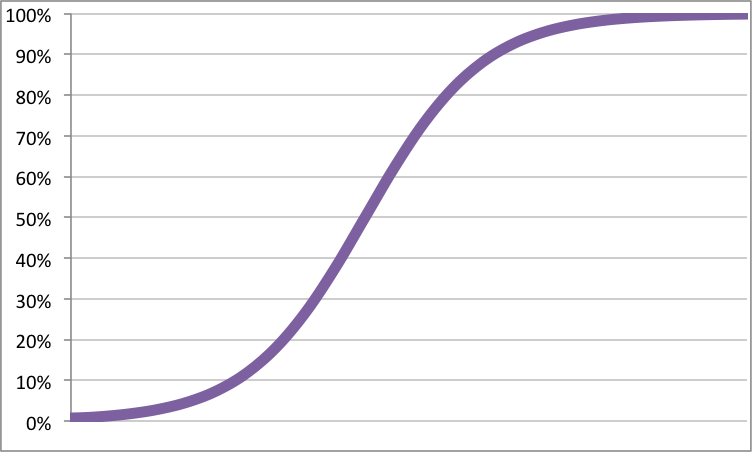

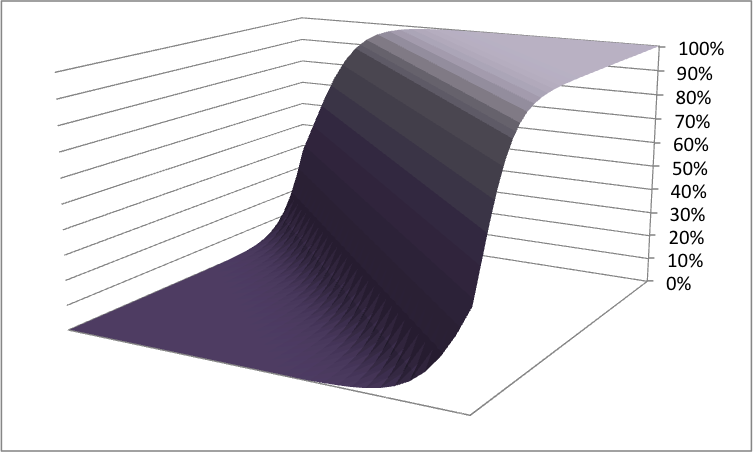

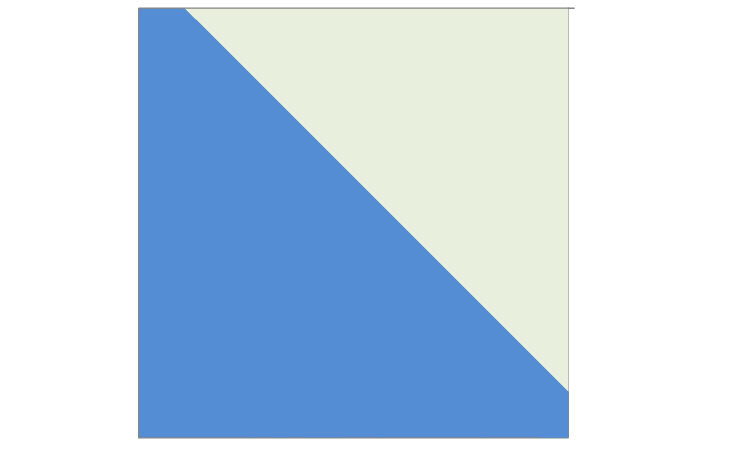

Lines and Circles and Logistic Regression

Wednesday, February 26, 2014

Taking a Random Sample on Amazon Redshift

Columnar databases have interesting performance characteristics, because the database stores each column separately from other columns. Although generally bad performance-wise for ACID-compliant transactions (if you don't know what ACID is, then you don't need to know), columnar databases are good for analysis.

However, your intuition about how things work may not apply. A seemingly simple query such as this:

takes a relatively long time (several minutes) because all the columns have to be read independently. On the other hand, a query such as:

select min(BrowserId), max(BrowserId)

from PageViews;

Goes quite fast (a few seconds), because only one column has to be read into memory. The more columns the queries reads, the slower it is -- other things being equal.

Back to the random sample. A typical way of getting this type of random sample is to first find the reduced set of visitors and then join them back to the full page views. This sounds cumbersome, but the strategy actually works well on many databases. Applied to the query we were working with, the resulting query looks something like:

select pv.BrowserId,

from (select distinct BrowserId

from PageViews

order by random()

limit 100000

) list join

PageViews pv

on list.BrowserId = pv.BrowserId

group by BrowserId;

This is a reasonable and standard approach to reduce the processing overhead. The subquery list produces all the BrowserIds and then sorts them randomly (courtesy of the random() function). The limit clause then takes a sample of one hundred thousand (out of many tens of millions). The join would normally use an indexed key, so it should go pretty fast. On Redshift, the subquery to get list performs relatively well. But the entire query did not finish (our queries time out after 15-30 minutes). We experimented with a several variations, to no avail.

What finally worked? Well, a much simpler query and this surprised us. The following returned in just a few minutes:

select BrowserId,

from PageViews pv

group by BrowserId

order by random()

limit 100000;

In other words, doing the full aggregation on all the data and then doing the sorting is actually faster than trying to speed up the aggregation by working on a subset of the data.

I've been working with parallel databases for over twenty years. I understand why this works better than trying to first reduce the size of the data. Nevertheless, I am surprised. My intuition about what works well in databases can be inverted when using parallel and columnar databases.

One of Vicky's requirements was for a repeatable random sample. That means that we can get exactly the same sample when running the same query again. The random() function does not provide the repeatability. In theory, by setting the seed, it should. In practice, this did not seem to work. I suspect that aspects of load balancing in the parallel environment cause problems.

Fortunately, Postgres supports the md5() function. This is a hash function that converts a perfectly readable string into a long string containing hexadecimal digits. These digits have the property that two similar strings have produce very different results, so this is a good way to randomize strings. It is not perfect, because two BrowserIds could have the same hash value, so they would always be included or excluded together. But, we don't need perfection; we are not trying to land a little Curiousity lander in a small landing zone on a planet tens of millions of miles away.

The final form of the query was essentially:

select BrowserId,

from PageViews pv

group by BrowserId

order by md5('seed' || BrowserId)

limit 100000;

Thursday, November 14, 2013

What will I do on my Caribbean vacation? Teach data mining, of course!

Wednesday, September 25, 2013

For Predictive Modeling, Big Data Is No Big Deal

Sunday, October 21, 2012

Catch our Webcast on November 15

Tuesday, September 11, 2012

Upcoming Speaking Engagements

This Friday (9/14) I will be at Big Data Innovation talking about how Tripadvisor for Business models subscriber happiness and what we can do to improve a subscriber's probability of renewal.

On October 1 and 2 I will be at Predictive Analytics World in Boston. This has become my favorite data mining conference. On the Monday, I will be visiting with my friends at JMP and giving a sponsored talk about how we use JMP for cannibalization analysis at Tripadvisor for Business. On Tuesday, I will go into the details of that analysis in more detail in a regular conference talk.

Sunday, March 11, 2012

Measuring Site Engagement: Pages or Sessions

A media web site is challenging, because there is no simple definition of engagement or customer worth. The idea is that engagement can either lead to more advertising views or to longer subscriptions, depending on the business model for the site. On the other hand, for a retailing site, the question is simpler, because there is a simple method to see who the best customers are. Namely, the amount of money they spend.

Engagement is a nice marketing concept, but how can it be defined in the real world? One way is to simply look at the number of page views during some period of time. Another is to look at the number of sessions (or alternatively days of activity if sessions are not available) during a specified period of time. Yet another is to measure breadth of usage of the site over a period of time: Does the user only go to one page? Is the user only coming in on referrals from Google?

The first analysis used one month of data to define engagement. The top users for one month were determined based on pages and sessions. Of course, there is a lot of overlap between the two groups -- about 60% of the top deciles overlapped.

Which group seems better for defining engagement, the top users by page views or by sessions? To answer this, let's borrow an idea from survival and measure how many users are still around nine months later. (Nine months is arbitrary in this case). In this case, the return rate for the top decile for sessions was 74.4% but for the top decile for pages was lower at 73.8%. Not a big difference, but one that suggests that sessions are better.

Actually, the results are even more striking for visitors who are not in both top deciles. For the non-overlapping group, the session return rate is69.6% versus 67.9% for the page deciles.

For defining engagement, we then extended these results to three months instead of one to find the top one million most engaged users. The three measures are:

- Visitors that have the most page views over three months.

- Visitors that have the most sessions over three months.

- Visitors in the top tercile of sessions (third) in each month, then take the highest terciles.

Three months was chosen as a rather arbitrary length of time, because the data was available. Holding it constant also lets us understand the difference between sessions and page views.

These three methods all produced about the same number of visitors -- the goal was to find the top one million most engaged users.

By these measures, the top one million visitors chosen by the three methods had the following "return" rates, nine months later:

- Page views in three months: 65.4%

- Sessions in three months: 65.9%

- Sessions over three months: 66.9%

The nine-month survival suggests that the sessions over three months is the better approach for measuring engagement.