Last week, a book -- a real, hardcover, paper-paged book -- arrived in the mail with the title *Heuristics in Analytics: A Practical Perspective of What Influences Our Analytic World*. The book wasn't a total surprise, because I had read some of the drafts a few months ago. One of the authors, Fiona McNeill, is an old friend; the other, Carlos, is a newer friend.

What impressed me about the book is its focus on the heuristic (understanding) side of analytics rather than the algorithmic or mathematical side. Many books that try to avoid technical detail end up resembling political sound bites: any substance is as lost as the figures in a Jackson Pollock painting. You can peel away the layers and still find nothing, until you eventually reach a blank canvas.

A key part of their approach is putting analytics in the right context. Their case studies do a good job of explaining how the modeling process fits into the business process. So, a case study on collections discusses different models that might be used, answering questions such as:

- How long until someone will pay a delinquent bill?

- How much money can likely be recovered?

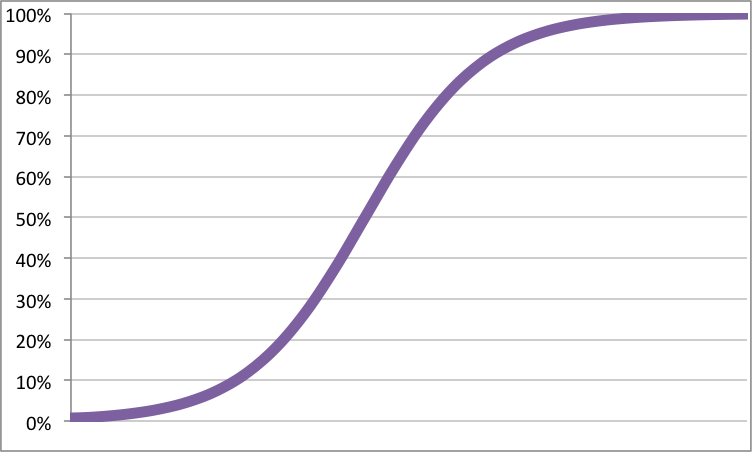

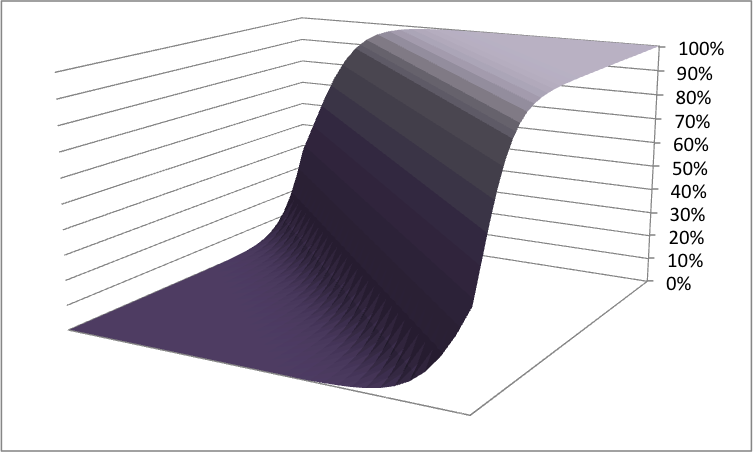

This particular example walks through multiple steps of the business process, including financial calculations of how much the modeling is actually worth. It also covers multiple types of models, such as a segmentation model (based on Kohonen networks), and the differences -- from the business perspective -- between the resulting segments. Baked into the discussion is how to use such models and how to interpret the results.
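For readers who haven't met Kohonen networks (self-organizing maps), here is a minimal, generic sketch of how one can group records into segments. It is not the book's model: I haven't seen the authors' data or settings, so the features, grid size, decay schedules and the function names `train_som`, `best_matching_unit` and `segment` are all hypothetical choices for illustration. The idea is that each node on a small grid holds a prototype vector; for every record, the closest node (the "best matching unit") and its grid neighbours are nudged toward that record, and each node ends up representing one segment.

```python
import math
import random


def best_matching_unit(weights, x):
    """Return the grid node whose weight vector is closest (squared Euclidean) to x."""
    return min(
        weights,
        key=lambda node: sum((w - v) ** 2 for w, v in zip(weights[node], x)),
    )


def train_som(data, rows, cols, epochs=50, lr0=0.5, sigma0=None, seed=0):
    """Train a small rectangular self-organizing map (Kohonen network).

    data: list of equal-length numeric feature vectors, ideally scaled to similar ranges.
    Returns a dict mapping grid coordinates (row, col) to weight vectors.
    """
    rng = random.Random(seed)
    if sigma0 is None:
        sigma0 = max(rows, cols) / 2.0
    # Initialise every node with a copy of a randomly chosen data point.
    weights = {
        (r, c): list(rng.choice(data)) for r in range(rows) for c in range(cols)
    }
    total_steps = epochs * len(data)
    step = 0
    for _ in range(epochs):
        order = list(data)
        rng.shuffle(order)
        for x in order:
            frac = step / total_steps
            # Learning rate decays linearly toward 0; the neighbourhood radius
            # shrinks linearly but never drops below 0.5 grid units.
            lr = lr0 * (1.0 - frac)
            sigma = max(sigma0 * (1.0 - frac), 0.5)
            bmu = best_matching_unit(weights, x)
            for node, w in weights.items():
                # Gaussian neighbourhood: nodes near the winner on the grid move more.
                grid_d2 = (node[0] - bmu[0]) ** 2 + (node[1] - bmu[1]) ** 2
                h = math.exp(-grid_d2 / (2.0 * sigma * sigma))
                for i, v in enumerate(x):
                    w[i] += lr * h * (v - w[i])
            step += 1
    return weights


def segment(weights, data):
    """Assign each record to the grid node (segment) that best matches it."""
    return [best_matching_unit(weights, x) for x in data]


if __name__ == "__main__":
    # Hypothetical delinquent accounts: (days past due, balance owed), both scaled to [0, 1].
    accounts = [
        [0.10, 0.05], [0.15, 0.10], [0.12, 0.08],  # recently late, small balances
        [0.85, 0.90], [0.90, 0.80], [0.80, 0.95],  # long overdue, large balances
    ]
    som = train_som(accounts, rows=2, cols=2, epochs=100, seed=42)
    for acct, node in zip(accounts, segment(som, accounts)):
        print(acct, "-> segment", node)
```

The business interpretation the book emphasizes happens after this step: looking at which accounts land in each node and deciding what each segment means for collections.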

In this fashion, the book covers most of the common data mining techniques, along with chapters devoted specifically to graph analysis. This is particularly timely, because graphs are a very good way to express relationships in the real world.

I do wish that the data behind some of the book's examples were available. It would be very interesting to work with.