One of the claims that they make is:

To draw an analogy to SQL, map is like the group-by clause of an aggregate query. Reduce is analogous to the aggregate function (e.g., average) that is computed over all the rows with the same group-by attribute.

This claim is worth discussing in more detail because it is a very powerful and intuitive analogy. And, it is simply incorrect. MapReduce is much more powerful than SQL aggregations.

Another reason I find their claim interesting is that I use the same analogy to describe MapReduce to people familiar with databases. Let me explain this in a bit more detail. Consider the following SQL query to count the number of customers who start in each month:

SELECT MONTH(c.start_date), COUNT(*)

FROM customer c

GROUP BY MONTH(c.start_date)

Now, let's consider how MapReduce would "implement" this query. Of course, MapReduce is a programming framework, not a query interpreter, but we can still use it to solve the same problem. The MapReduce framework solves this problem in the following way:

First, the map phase would read records from the customer table and produce an output record with two parts. The first part is called the "key", which is populated with MONTH(c.start_date). The second is the "value", which can be arbitrarily complicated. In this case, it is as simple as it gets. The value part simply contains "1".

The reduce phase then reads the key-value pairs, and aggregates them. The MapReduce framework sorts the data so records with the same key always occur together. This makes it easy for the reduce phase to add together all the "1"s to get the count for each key (which is the month number). The result is a count for each key.
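The two phases described above can be sketched in a few lines of Python. This is only an in-memory illustration, not a real MapReduce job: the `customers` list, the `shuffle` helper (standing in for the framework's sort), and the function names are all assumptions made for the example.

```python
from collections import defaultdict
from datetime import date

# Hypothetical stand-in for the customer table: (customer_id, start_date).
customers = [
    (1, date(2008, 1, 15)),
    (2, date(2008, 1, 30)),
    (3, date(2008, 3, 2)),
]

def map_phase(record):
    """Emit one (key, value) pair per record: (start month, 1)."""
    _customer_id, start_date = record
    yield start_date.month, 1

def shuffle(pairs):
    """Group values by key, standing in for the framework's sort step."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return sorted(grouped.items())

def reduce_phase(key, values):
    """Add together all the 1s for a single key."""
    return key, sum(values)

pairs = [pair for record in customers for pair in map_phase(record)]
counts = dict(reduce_phase(key, values) for key, values in shuffle(pairs))
print(counts)  # {1: 2, 3: 1}
```

The result has one entry per month number, which is what the GROUP BY query returns.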

I have intentionally oversimplified this process by describing it at a high level. The first simplification is leaving out all the C++ or Java overhead for producing the programs (although there are attempts at interpreted languages to greatly simplify this process). Another is not describing the parallel processing aspects. And yet another oversimplification is leaving out the "combine" step. The above algorithm can be made more efficient by first "reducing" the values locally on each processor to get subtotals, and then "reducing" these again. This post, however, is not about computational efficiency.
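For readers curious about the "combine" step, here is a rough Python sketch of the idea. The two partitions are hypothetical and stand in for the month keys seen by two separate processors; a real framework would run the local step in parallel and move the subtotals across the network.

```python
from collections import Counter

# Hypothetical partitions: the start-month keys read by two processors.
partitions = [
    [1, 1, 3],      # processor A
    [1, 3, 3, 7],   # processor B
]

def combine(months):
    """Local 'reduce': subtotal per month within one partition."""
    return Counter(months)

def reduce_subtotals(subtotals):
    """Final 'reduce': add the per-partition subtotals together."""
    total = Counter()
    for subtotal in subtotals:
        total.update(subtotal)  # Counter.update adds counts
    return dict(total)

subtotals = [combine(partition) for partition in partitions]
totals = reduce_subtotals(subtotals)
print(totals)  # {1: 3, 3: 3, 7: 1}
```

Only the subtotals, not every individual "1", reach the final reduce, which is where the efficiency gain comes from.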

The important thing to note is the following three correspondences between MapReduce and SQL aggregates.

- First, MapReduce uses a "key". This key is the GROUP BY expression in a SQL aggregation statement.

- Second, MapReduce has a "map" function. This is the expression inside the parentheses of the aggregation function. This can be an arbitrary function or CASE statement in SQL. In databases that support user defined functions, this can be arbitrarily complicated, as with the "map" function in MapReduce.

- Third, MapReduce has a "reduce" function. This is the aggregation function. In SQL, this is traditionally one of a handful of functions (SUM(), AVG(), MIN(), MAX()). However, some databases support user defined aggregation functions, which can be arbitrarily complicated.

Wrong!

The next example extends the previous one by asking how many customers start and how many stop in each month. There are several ways of approaching this. The following shows one approach using a FULL OUTER JOIN:

SELECT COALESCE(start.m, stop.m) as m, ISNULL(numstarts, 0), ISNULL(numstops, 0)

FROM (SELECT MONTH(start_date) as m, COUNT(*) as numstarts

......FROM customer c

......GROUP BY MONTH(start_date)

.....) start FULL OUTER JOIN

.....(SELECT MONTH(stop_date) as m, COUNT(*) as numstops

......FROM customer c

......GROUP BY MONTH(stop_date)

.....) stop

.....ON start.m = stop.m

Another formulation might use an aggregation and UNION:

SELECT m, SUM(isstart), SUM(isstop)

FROM ((SELECT MONTH(start_date) as m, 1 as isstart, 0 as isstop

.......FROM customer c)

......UNION ALL

........(SELECT MONTH(stop_date) as m, 0 as isstart, 1 as isstop

........FROM customer c)) a

GROUP BY m

Now, in both of these, there are two pieces of processing, one for the starts and one for the stops. In almost any database optimizer that I can think of, both these queries (and other, similar queries) require two passes through the data, one pass for the starts and one pass for the stops. And, regardless of the optimizer, the SQL statements describe two passes through the data.

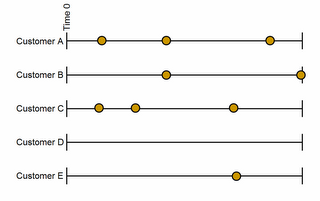

The MapReduce framework has a more efficient, and perhaps, even more intuitive solution. The map phase can produce two output keys for each record:

- The first has a key that is MONTH(start_date) and the value is a structure containing isstart and isstop with values of 1 and 0 respectively.

- The second has a key that is MONTH(stop_date) and the values are 0 and 1 respectively.

The reduce phase then adds up isstart and isstop for each month, so a single pass through the data produces both counts. How much better this is becomes apparent when we look in more detail at what is happening. When processing data, SQL limits us to one key per record for aggregation. MapReduce does not have this limitation. We can have as many keys as we like for each record.
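The two-key map described above might look something like the following Python sketch. The sample rows are hypothetical, and skipping customers with no stop date is an assumption of this example rather than something the SQL versions do.

```python
from collections import defaultdict
from datetime import date

# Hypothetical customer rows: (customer_id, start_date, stop_date).
# stop_date is None for customers who have not stopped (an assumption here).
customers = [
    (1, date(2008, 1, 15), date(2008, 3, 1)),
    (2, date(2008, 1, 30), None),
    (3, date(2008, 3, 2), date(2008, 3, 20)),
]

def map_phase(record):
    """Emit up to two keys per record; each value is (isstart, isstop)."""
    _customer_id, start_date, stop_date = record
    yield start_date.month, (1, 0)
    if stop_date is not None:
        yield stop_date.month, (0, 1)

def reduce_phase(key, values):
    """Sum the isstart and isstop flags for one month."""
    numstarts = sum(isstart for isstart, _ in values)
    numstops = sum(isstop for _, isstop in values)
    return key, (numstarts, numstops)

# A single pass over the records feeds both the start and the stop counts.
grouped = defaultdict(list)
for record in customers:
    for key, value in map_phase(record):
        grouped[key].append(value)

result = dict(reduce_phase(key, values) for key, values in sorted(grouped.items()))
print(result)  # {1: (2, 0), 3: (1, 2)}
```

Each customer row is read once, yet it contributes to two different groups, which is exactly what a single GROUP BY cannot express.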

This difference is particularly important when analyzing complex data structures to extract features -- of which processing text from web pages is an obvious example. To take another example, one way of doing content filtering of email for spam involves looking for suspicious words in the text and then building a scoring function based on those words (naive Bayesian models would be a typical approach).

Attempting to do this in MapReduce is quite simple. The map phase looks for each word and spits out a key-value pair for that word (in this case, the key is actually the email id combined with the word). The reduce phase counts them all up. Either the reduce phase or a separate program can then apply the particular scoring code. Extending such a program to include more suspicious words is pretty simple.
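As a rough illustration of the word-counting step only (not the scoring), here is a small Python sketch. The word list, the sample emails, and the simple whitespace tokenizer are all made up for the example.

```python
from collections import Counter

# Hypothetical list of suspicious words; extending it is a one-line change.
SUSPICIOUS_WORDS = {"free", "winner", "prize"}

# Hypothetical emails keyed by email id.
emails = {
    "msg-1": "You are a winner! Claim your free prize, free of charge.",
    "msg-2": "Meeting moved to Tuesday.",
}

def map_phase(email_id, text):
    """Emit ((email_id, word), 1) for every suspicious word occurrence."""
    for token in text.lower().split():
        word = token.strip(".,!?;:")
        if word in SUSPICIOUS_WORDS:
            yield (email_id, word), 1

def reduce_phase(pairs):
    """Count the occurrences for each (email_id, word) key."""
    counts = Counter()
    for key, value in pairs:
        counts[key] += value
    return dict(counts)

pairs = [pair for email_id, text in emails.items()
         for pair in map_phase(email_id, text)]
word_counts = reduce_phase(pairs)
print(word_counts)
```

A single email produces a varying number of keys, one for each suspicious word it contains, and an email with none produces no output at all.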

Attempting to do this in SQL . . . well, I wouldn't do it in the base language. It would be an obfuscated mess of CASE statements or a non-equi JOIN on a separate word table. The MapReduce approach is simpler and more elegant in this case. Ironically, I usually describe the problem as "SQL is not good in handling text." However, I think the real problem is that "SQL aggregations are limited to one key per record."

SQL has many capabilities that MapReduce does not have. Over time, MapReduce may incorporate many of the positive features of SQL for analytic capabilities (and hopefully not incorporate unneeded overhead for transactions and the like). Today, SQL remains superior for most analytic needs -- and it can take advantage of parallelism without programming. I hope SQL will also evolve, bringing together the enhanced functionality from other approaches.

--gordon